Over the past three years, our work on MOOCs has advanced knowledge about the effective scaling and delivery of MOOC instruction, MOOC participants' motivation and persistence, and methodologies for collecting and mining massive amounts of data. We have also developed online learning tools to support research and to improve teaching and learning.

Understanding Online Learners

In an early effort to deconstruct disengagement, we found four prototypical course trajectories in learner behavior that reflect differences in motivation.

We created a measure of enrollment intentions and applied it to study learner behavior and generate design directions, e.g. social spaces outside courses and content modularization.

There are substantial gender and geographical achievement gaps in MOOCs. Insufficient time is the main reason for leaving, partly due to time mismanagement.

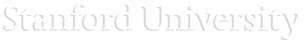

Superposters are frequent posters who enhance the quality of course forum conversations and do not suppress less prolific contributors. Encouraging forum participation works better with neutral framings than with collectivist or individualist ones.

Learning in groups can be an opportunity to experience cultural diversity. A design experiment shows positive effects of culturally diverse learner groups.

Peer grading accuracy can be improved through smart grader assignments and bias correction.
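As a rough illustration of what bias correction can look like, the sketch below estimates each grader's bias as their average deviation from the per-submission mean score, then subtracts that bias before averaging. This is a simplified assumption for illustration, not the method used in the work summarized above; the function name `debias_scores` and the tuple layout are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def debias_scores(grades):
    """Return {submission: bias-corrected mean score}.

    grades: list of (grader, submission, score) tuples.
    Hypothetical sketch: a grader's bias is taken to be their mean
    deviation from the per-submission average score.
    """
    # Raw average score for each submission.
    by_submission = defaultdict(list)
    for grader, submission, score in grades:
        by_submission[submission].append(score)
    raw_mean = {s: mean(v) for s, v in by_submission.items()}

    # Each grader's average deviation from those raw averages.
    deviations = defaultdict(list)
    for grader, submission, score in grades:
        deviations[grader].append(score - raw_mean[submission])
    bias = {g: mean(d) for g, d in deviations.items()}

    # Subtract the grader's bias from every score, then re-average.
    corrected = defaultdict(list)
    for grader, submission, score in grades:
        corrected[submission].append(score - bias[grader])
    return {s: mean(v) for s, v in corrected.items()}
```

A single pass like this only helps when graders overlap unevenly across submissions; with a fully balanced design the corrected means equal the raw means.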

The trajectories of students' work as they solve richly structured assignments contain patterns that reflect how teachers would suggest individuals make forward progress.

A dropout predictor succeeds in flagging 40%-50% of dropouts while they are still active and another 40%-45% within 14 days of leaving the course. Targeted surveys provide stop-out-specific feedback.

An analysis of video viewing habits in 2 blended CS courses found 2-3x longer viewing times than typical for MOOCs.

Evaluating Digital Instruction

Assessment with big data, or Data-Enriched Assessment, should be continuous, feedback-oriented, and multifaceted.

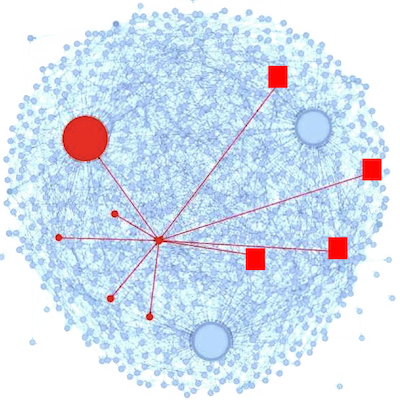

Blended Engineering Courses: We surveyed students in several blended graduate engineering classes to understand their experience and identify critical design features.

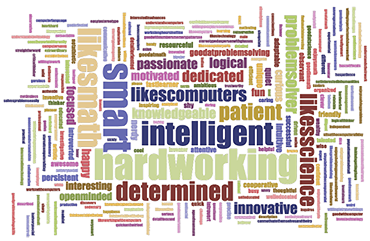

Analysis of syntactic and functional differences among one million programming assignment submissions reveals a small number of solution approaches, enabling widely relevant instructor feedback.
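One way to picture how many submissions can collapse into a few approaches is to group programs whose syntax trees match once variable names are ignored. The sketch below does this for Python source using the standard `ast` module. It is an assumption for illustration only and does not reproduce the analysis summarized above; `group_by_structure` and the placeholder naming scheme are hypothetical.

```python
import ast
from collections import defaultdict


class _Canonicalize(ast.NodeTransformer):
    """Rename variables and arguments to placeholders in order of first use."""

    def __init__(self):
        self.names = {}

    def _placeholder(self, name):
        return self.names.setdefault(name, f"v{len(self.names)}")

    def visit_Name(self, node):
        node.id = self._placeholder(node.id)
        return node

    def visit_arg(self, node):
        node.arg = self._placeholder(node.arg)
        return node


def group_by_structure(submissions):
    """Group submissions whose canonicalized syntax trees are identical.

    submissions: {student_id: source_code}. Returns a list of id lists.
    """
    groups = defaultdict(list)
    for student, source in submissions.items():
        tree = _Canonicalize().visit(ast.parse(source))
        groups[ast.dump(tree)].append(student)
    return list(groups.values())
```

Feedback written once for a group could then be shown to every learner in it. Matching on syntax alone misses functionally equivalent programs that are written differently, so this is a lower bound on how much grouping is possible.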

Instructor dashboards improve student learning in a middle school Computer Science class.

Encouraging positive perceptions and behavior in online teams through interventions can counterintuitively result in learners leaving their teams. Follow-up studies on team persistence examine effects of being assigned to a team, group composition effects, and the effect of being appointed a leader.

Explain why: Prompting explanations of beliefs can help clear up misconceptions.

When given a choice, two-thirds of learners watch videos with the instructor's face, though one-third prefer not to see it. A strategic presentation of the face increases attrition for some learners, but learning outcomes remain constant. An eye-tracking experiment shows that the face attracts significant visual attention. Results of a lab experiment suggest that a social robot could take the instructor's place if designed well.

Building Learning Tools

Lacuna Stories is a social reading platform that encourages annotation and connecting fragments of texts. We study how it supports learners and instructors in hybrid courses through platform data, student and faculty interviews, and classroom observations.

PeerStudio is a tool that facilitates rapid peer feedback, which has been shown to improve performance.

Talkabout enables large online classes to create small video discussion groups. These globally diverse groups improve student achievement and help students form global friendships.

Codewebs is a search engine over learners' coding homework submissions. Associated indexing enables retrieval of common mistakes, and consequent directed feedback.

Student code submissions can be assessed using deep learning to propagate human comments and provide feedback at scale.

Forum post classifier: Posts are labeled along multiple dimensions (e.g. confusion). Using closed caption information, the system can then guide learners towards relevant content that is otherwise difficult to access.
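To illustrate the second step, the sketch below ranks closed-caption segments by word overlap with a post that has already been labeled as confused. It is a minimal sketch under stated assumptions: the actual system's matching method is not described here, and `rank_segments`, the segment tuple layout, and the Jaccard scoring are hypothetical choices.

```python
import re


def tokenize(text):
    """Return the set of lowercase words longer than three letters."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) > 3}


def rank_segments(post, segments):
    """Rank caption segments by Jaccard overlap with the post text.

    segments: list of (video_id, start_seconds, caption_text).
    Returns (score, video_id, start_seconds) tuples, best match first.
    """
    post_words = tokenize(post)
    ranked = []
    for video_id, start, caption in segments:
        words = tokenize(caption)
        union = post_words | words
        score = len(post_words & words) / len(union) if union else 0.0
        ranked.append((score, video_id, start))
    return sorted(ranked, key=lambda r: r[0], reverse=True)
```

The top-ranked segment gives a video and timestamp that could be offered to the learner alongside the forum thread.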

Ongoing Research

Psychological interventions (e.g., self-affirmation or mental contrasting with implementation intentions) that support online learners in general and close global achievement gaps in MOOCs.

Developing a new approach to Knowledge Tracing using recurrent neural networks.

An interactive learning tool for teaching theorem proving.

Robotic simulation for teaching choreography online.

Interactive simulations and accompanying short online learning modules for core topics in engineering, starting with mass-spring systems to teach differential equations.
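For readers unfamiliar with the example, an undamped mass-spring system obeys m·x'' = -k·x, whose solution oscillates at angular frequency sqrt(k/m). The sketch below integrates that equation numerically and provides the closed-form solution for comparison. It is an illustrative assumption, not code from the learning modules; the function names and default parameter values are hypothetical.

```python
import math


def simulate_mass_spring(m=1.0, k=4.0, x0=1.0, v0=0.0, dt=0.001, t_end=1.0):
    """Integrate m*x'' = -k*x with the semi-implicit Euler method.

    Returns the position at t_end.
    """
    x, v = x0, v0
    steps = round(t_end / dt)
    for _ in range(steps):
        v += (-k / m) * x * dt  # update velocity from the spring force
        x += v * dt             # then update position with the new velocity
    return x


def exact_position(m=1.0, k=4.0, x0=1.0, v0=0.0, t=1.0):
    """Closed-form position of the undamped oscillator at time t."""
    omega = math.sqrt(k / m)
    return x0 * math.cos(omega * t) + (v0 / omega) * math.sin(omega * t)
```

Comparing `simulate_mass_spring()` with `exact_position()` for different values of `dt` shows how the step size controls the numerical error.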

Phoenix Corps: An interdisciplinary effort to create an online graphic novel to teach classical thermodynamics in a narrative-driven way.

An infrastructure for custom learning tools in existing platforms to collect data across platforms and analyze it with alternative processing models.

Looking beyond MOOCs, we will engage in interdisciplinary use-inspired basic research on human learning in a diversity of digital learning environments. We will create interventions that simultaneously support improved learning and produce data from which we can build contextualized explanatory models of human learning. To support this research, we will continue to build new tools and methodologies for designing learning environments, conducting learning research, and modeling data.

Resources for Research

The online instructional offerings of Stanford's Vice Provost for Teaching and Learning (VPTL) are delivered through three platforms: Lagunita (a local instance of OpenEdX), Coursera, and NovoEd. Fine-grained data for research originate from all three platforms. We have also produced a dataset of 30,000 anonymized forum posts from Stanford online courses that are manually labeled on several dimensions to support new research. For details, see Stanford Datastage.

To manage the technical demands of a data-driven science of learning, we have created a data collection infrastructure that converts often inscrutable platform exports into formats that are amenable to analysis with common statistical software packages. In addition to making these data available to Stanford researchers, we are able to share Lagunita data collected after June 14, 2014 with researchers at other institutions. We consider requests for data shares from researchers beyond Stanford for purposes of non-proprietary academic inquiry. Applications may be submitted at the CAROL website.
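The conversion step mentioned above can be pictured as flattening raw event records into a table. The sketch below turns JSON-lines records into CSV text that common statistical packages can read. It is an assumption for illustration and not the actual infrastructure: the function name `events_to_csv` and the field names `user_id`, `event_type`, and `time` are hypothetical.

```python
import csv
import io
import json


def events_to_csv(json_lines, fields=("user_id", "event_type", "time")):
    """Flatten JSON-lines event records into a CSV string.

    Fields not listed are dropped; missing fields become empty cells.
    The default field names are hypothetical.
    """
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(fields), lineterminator="\n")
    writer.writeheader()
    for line in json_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines in the export
        record = json.loads(line)
        writer.writerow({f: record.get(f, "") for f in fields})
    return buffer.getvalue()
```

Passing an open file object as `json_lines` works because files iterate line by line.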

Stanford has been an active driver of national conversations about ethical dimensions of academic research with online learning data. Our research activity is guided by the Asilomar Convention for Learning Research in Higher Education. Our intramural research and external data shares are overseen by a Data Policy Working Group, which works in concert with Stanford’s VPTL and Office of General Counsel.